National Sovereignty Under Siege

The Voluntary Trap: How Denmark Repackaged Chat Control After Defeat

After Germany blocked the October vote, Europe’s surveillance proposal didn’t die—it evolved. Denmark’s November compromise claims to abandon mandatory scanning while preserving identical outcomes through legal sleight of hand. The repackaging reveals the essential dynamic: when democratic opposition defeats mass surveillance, proponents don’t accept defeat. They redraft terminology, shift articles, and reintroduce the same architecture under different labels until resistance exhausts itself.

The pattern is documented across five iterations. Sweden’s January-June 2023 presidency failed. Belgium couldn’t secure passage in June 2024. Hungary’s presidency ended December 31, 2024 without agreement. Poland’s January-June 2025 presidency ended without a deal when 16 pro-scanning states refused meaningful compromise. Each defeat produced not withdrawal but relabeling: “scanning” became “detection orders,” “mandatory” became “risk mitigation,” and “breaking encryption” became “lawful access.” October’s blocking minority forced Denmark’s hand, but rather than accept defeat, Justice Minister Peter Hummelgaard withdrew the proposal on October 31 and immediately began drafting version 2.0.

The Loophole Disguised as Compromise

Denmark’s November 5 revised text removes the “detection orders” in Articles 7-11, the language that mandated scanning. Privacy advocates initially celebrated. Then legal experts read Article 4. The provision requires all communication providers to implement “all appropriate risk mitigation measures” to prevent abuse on their platforms. Services classified as “high risk” (essentially any platform offering encryption, anonymity, or real-time communication) face obligations that experts argue amount to mandatory scanning without using the word “mandatory.”

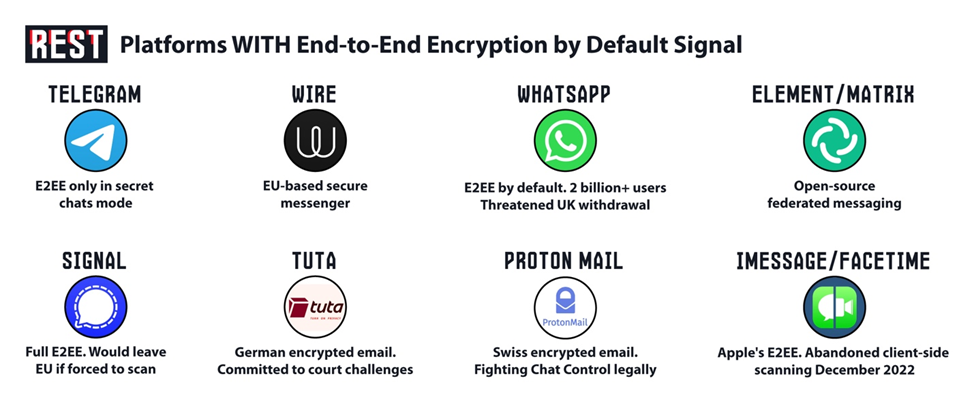

Patrick Breyer’s technical analysis identifies how the mechanism operates: “The loophole renders the much-praised removal of detection orders worthless and negates their supposed voluntary nature.” If messaging platforms such as Signal, WhatsApp, or Telegram want to operate in the EU, they must demonstrate “appropriate” measures against abuse. What counts as “appropriate” for a platform offering end-to-end encryption? The proposal’s logic suggests only one answer: scanning content on the device before encryption begins. Regulators determine appropriateness, and platforms that refuse face potential exclusion from the market. The choice becomes implementing scanning or leaving Europe, which is functionally identical to a mandatory order.
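
To see why “scanning before encryption” leaves end-to-end encryption intact on paper but empty in practice, consider a minimal sketch of where such a hook would sit in a messaging client. Everything in it is hypothetical. `BLOCKLIST`, `report_to_authority`, and `encrypt_for_recipient` are invented names. The exact SHA-256 matching stands in for the perceptual hashes or classifiers that real systems would use, and the “encryption” is a labeled placeholder, not real cryptography. The sketch illustrates the architecture only. It is not the proposal’s text or any vendor’s implementation.

```python
import hashlib
from dataclasses import dataclass, field

# HYPOTHETICAL list of SHA-256 digests supplied by an outside authority.
# Real deployments would use perceptual hashes or ML classifiers instead.
BLOCKLIST = {hashlib.sha256(b"known-prohibited-example").hexdigest()}


@dataclass
class Client:
    # Stands in for a reporting channel that would leave the device.
    reports: list = field(default_factory=list)

    def report_to_authority(self, digest: str) -> None:
        # HYPOTHETICAL hook: records the match instead of transmitting it.
        self.reports.append(digest)

    def encrypt_for_recipient(self, plaintext: bytes) -> bytes:
        # PLACEHOLDER ONLY, not encryption: stands in for an E2EE library call.
        return plaintext[::-1]

    def send(self, plaintext: bytes) -> bytes:
        # The scan runs on the device, on the plaintext, BEFORE encryption,
        # so end-to-end encryption never hides the content from the scanner.
        digest = hashlib.sha256(plaintext).hexdigest()
        if digest in BLOCKLIST:
            self.report_to_authority(digest)
        return self.encrypt_for_recipient(plaintext)
```

Note that `send` never inspects what `BLOCKLIST` contains. Whoever supplies the list decides what gets reported, and the recipient receives the same ciphertext whether or not a report was made.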

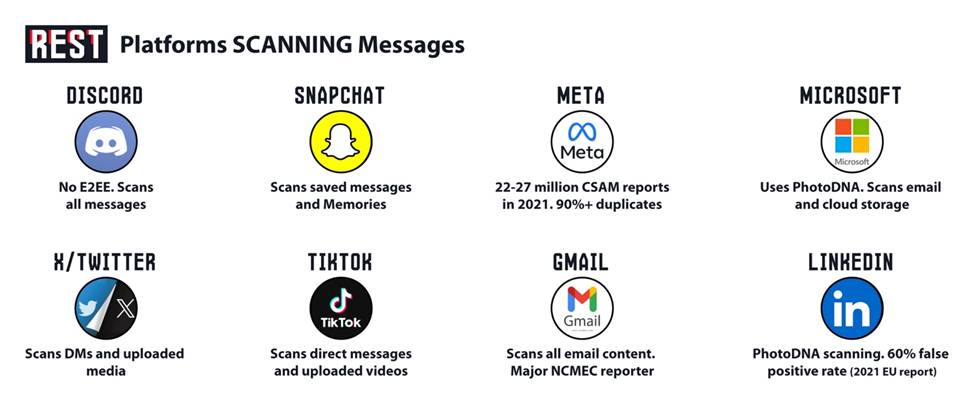

The EFF warns this creates permanent infrastructure for voluntary scanning that economic pressure will turn into universal practice. Meta, Google, and Microsoft already scan unencrypted content voluntarily. Extending that to encrypted content requires only technical implementation, not legal change. Privacy-focused European alternatives such as Proton, Tuta, and Wire, however, cannot scan without destroying their security model. The regulation therefore creates asymmetric compliance burdens that favor centralized US platforms over European competitors. Digital sovereignty rhetoric meets structural dependency.

Denmark’s text also extends scanning from images and videos to text messages and metadata. AI algorithms would analyze conversation patterns in an attempt to distinguish normal interaction from “grooming.” Research published in Scientific Reports found a maximum grooming-detection accuracy of 92% but noted critical limitations: the models cannot reliably detect vulnerable victims, show linguistic biases, and lack contextual understanding. Microsoft’s Project Artemis claimed 88% accuracy, but Microsoft itself warns against relying on this figure for policy, noting that it derives from a small English-language dataset. Scaled to billions of multilingual messages, this produces what privacy advocates call “a digital witch hunt,” in which algorithms flag conversations containing words like “love” or “meet” without understanding context such as sarcasm, flirting, therapeutic discussions, or legitimate family communication.
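
The scale problem is a base-rate effect, and a back-of-the-envelope calculation makes it concrete. The inputs below are hypothetical and chosen purely for illustration: 1 billion messages scanned, 1 abusive message in every 100,000, and a classifier whose sensitivity and specificity are both 92%. The cited 92% is an accuracy figure, not a measured false positive rate, so these inputs do not estimate any real deployment.

```python
def screening_outcomes(messages: int, prevalence: float,
                       sensitivity: float, specificity: float) -> dict:
    """Expected outcomes of screening `messages` items with a classifier.

    All inputs are HYPOTHETICAL. The 92% figure from the text is treated
    as both sensitivity and specificity purely for illustration.
    """
    abusive = messages * prevalence
    benign = messages - abusive
    true_pos = abusive * sensitivity          # abusive messages correctly flagged
    false_pos = benign * (1 - specificity)    # benign messages wrongly flagged
    precision = true_pos / (true_pos + false_pos)  # share of flags that are genuine
    return {"true_pos": true_pos, "false_pos": false_pos, "precision": precision}


# Hypothetical inputs: 1 billion messages, 1 in 100,000 abusive, 92% sens./spec.
result = screening_outcomes(1_000_000_000, 1e-5, 0.92, 0.92)
print(f"false positives: {result['false_pos']:,.0f}")
print(f"share of flags that are genuine: {result['precision']:.4%}")
```

With these assumed inputs, roughly 80 million benign messages would be flagged against about 9,200 genuine detections, so only about 1 flag in 8,700 would be correct. Better specificity reduces the false positives, but at this volume even 99.9% specificity would still flag about a million benign messages.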

When Survivors Say No: Opposition From Unexpected Quarters

The most powerful challenge to Chat Control comes from those it claims to protect. Alexander Hanff, a survivor of childhood sexual abuse, testified: “I didn’t have confidential communications tools when I was raped; all my communications were monitored by my abusers.” The proposal would recreate the surveillance of his childhood at societal scale. The Brave Movement established a “Survivors Taskforce” pairing abuse survivors with MEPs, but investigative journalists revealed internal strategy documents describing “divide and conquer” tactics, backed by more than $24 million in Oak Foundation funding.

Off Limits, the Netherlands’ oldest hotline for child sexual abuse material, opposes the proposal. Its former director, Arda Gerkens, invited Commissioner Johansson to visit but was turned down, while Johansson “visited Silicon Valley and big North American groups.” Child protection organizations recognize that surveillance doesn’t prevent abuse. It identifies material that has already been created. Prevention requires family support services, education, victim resources, and investigative capacity. Germany’s Society for Civil Rights (GFF) details how the proposal criminalizes minors themselves: over 40% of investigations into possession involved schoolyard cases, including teenagers who failed to delete abusive content from WhatsApp groups, and teachers and parents have been unintentionally criminalized as well.

The age verification mandate shows how child protection rhetoric produces child harm. Denmark’s text bars minors under 16 from messaging platforms with chat functions, including WhatsApp, Telegram, Snapchat, and online games. The restriction ostensibly prevents grooming, but research shows such measures are easily circumvented with VPNs. They also exclude vulnerable young people, including teenagers seeking help and those in abusive households. “Digital isolation instead of education, protection by exclusion instead of empowerment,” Breyer observes. The German Chaos Computer Club notes that perpetrators already rely on distribution channels that messaging-platform scans do not reach: public file hosters, encrypted archives, and darknet forums. The proposal targets the wrong infrastructure while harming the wrong population.

The Precedent That Legitimizes Global Authoritarianism

Europe’s decision shapes global norms. China already scans private messages at massive scale for political control: WeChat screens messages for content critical of the government, blocking transmission and flagging users. The EU proposal hands authoritarian regimes rhetorical legitimacy: “Even democratic Europe requires scanning for child protection; we merely extend it to additional threats.” India’s IT Rules (2021, amended in 2022) require platforms to “identify” prohibited content, which has been interpreted as a scanning requirement. Australia’s eSafety Commissioner released draft standards on November 20, 2023 requiring proactive detection across email, messaging, and cloud storage. More than 600 organizations, including Mozilla, Signal, and Proton, opposed them, but the proposal persists.

The UK’s Online Safety Act, passed in October 2023, grants Ofcom the power to require “accredited technology” for CSAM detection. The government admitted the technology “is not technically feasible” yet passed the law regardless, creating legal authority that awaits technical capability. Each jurisdiction pressures the others through regulatory arbitrage: platforms facing scanning mandates in several jurisdictions find compliance easier than fragmented resistance. The Internet Architecture Board (IAB) warns this creates “single points of failure” and “enormous attack surfaces” exploitable by hostile actors. Surveillance backdoors built for legitimate law enforcement work just as well for criminal networks and foreign intelligence services.

The trajectory of function creep is documented. Europol officials suggested in July 2022 extending detection to “other crime areas,” such as terrorism, drug trafficking, and copyright infringement. The UK blocklist, initially limited to CSAM and terrorism, expanded to copyright violations within years. “Temporary” USA PATRIOT Act measures became permanent and grew in scope. The EU Data Retention Directive, declared invalid by the European Court of Justice in 2014 for violating fundamental rights, finds an attempted resurrection in Chat Control’s architecture. Meta’s scanning has operated for over a decade. Reports rose from approximately 16 million in 2019 to 22-27 million in 2021, an increase of 38-69%, yet CSAM circulation has not decreased.
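
The 38-69% range follows directly from the report counts quoted in this paragraph. The short check below reproduces it from those figures alone. It verifies only the arithmetic and says nothing about whether the underlying counts are accurate.

```python
def percent_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Figures as stated in the text, in millions of reports.
low = percent_increase(16, 22)   # 37.5
high = percent_increase(16, 27)  # 68.75
print(f"{low:.0f}% to {high:.0f}%")  # prints "38% to 69%"
```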

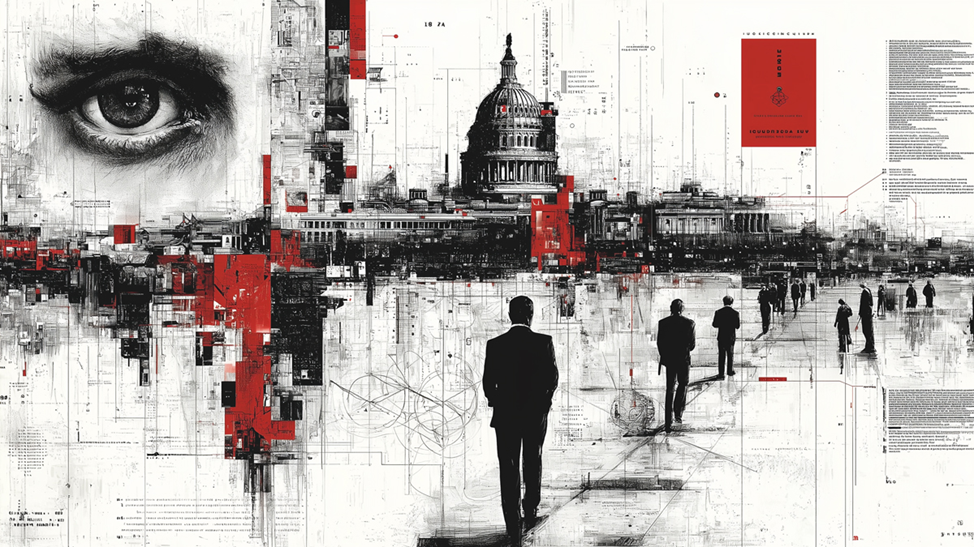

The Infrastructure That Cannot Be Unbuilt

The fundamental question is architectural: once client-side scanning infrastructure exists, who controls it? Denmark’s November proposal faces opposition from Germany, Luxembourg, and Poland, but the persistence across five failed iterations demonstrates permanent institutional pressure toward surveillance expansion. Commissioner Johansson’s term ended November 30, 2024, yet the proposal continues under new leadership—indicating the drive originates not from individual politicians but from structural incentives within law enforcement agencies, intelligence services, and commercial surveillance vendors.

The blocking minority must hold indefinitely. Supporters need to win only once. Austria bound itself through a parliamentary resolution in November 2022, which prevents the government from agreeing to the proposal without fundamental-rights corrections. Czech Prime Minister Petr Fiala stated in August 2025: “We will not allow monitoring of citizens’ private correspondence.” But parliamentary elections, coalition changes, and rotating Council presidencies create openings for renewed pressure. Denmark’s presidency runs through December 2025, leaving room for multiple vote attempts before Cyprus takes over the rotating presidency in January 2026.

The choice Europe faces is binary: communications either remain private by technical design or become transparent to state monitoring through mandated implementation. Client-side scanning either exists or it doesn’t. Encryption either protects or becomes theater. More than 500 cryptography experts from 34 countries have warned that the technology is “technically infeasible” with “unacceptably high false positive rates.” The Council’s own Legal Service concluded that client-side scanning “highly probably” constitutes illegal mass surveillance, which courts would likely annul. Yet technical impossibility and legal invalidity haven’t halted its political advancement. Instead, they have produced iterative repackaging that continues until exhaustion or capture enables passage.

The infrastructure, once built, serves whoever controls it. On a continent that remembers what surveillance states accomplish, this requires no explanation. The machine that sees everything awaits construction, and the vote approaches again. Whether Europe builds that machine or definitively rejects it will set not just European digital rights but global precedent for decades. Chat Control’s zombie-like persistence across repeated defeats suggests the battle will continue until Europe either decisively rejects it or incremental acceptance normalizes the capabilities it requires.